Writing Effective Multiple Choice Questions

This post provides guidelines for constructing multiple choice assessment questions.

While multiple choice questions can be used to measure a number of different learning outcomes, the validity of these assessments is greatly influenced by how well the questions are constructed. Use this guide to write multiple choice questions for both lower- and higher-level learning outcomes.

First, let’s break down the components of a multiple choice question:

The Question Stem:

- Align the stem with a learning outcome (see Writing Learning Objectives) – assessment questions should only measure the skills you have articulated for the students. In addition, don’t use a multiple choice question when a different type of question would better assess an outcome.

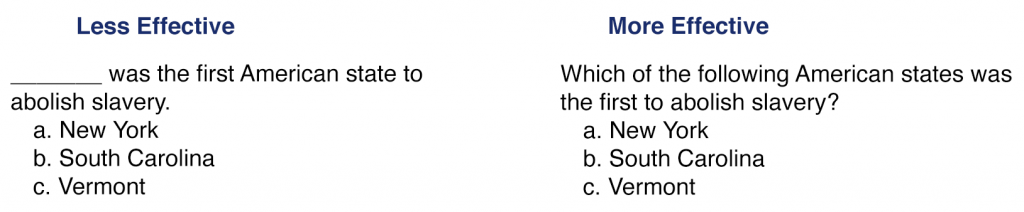

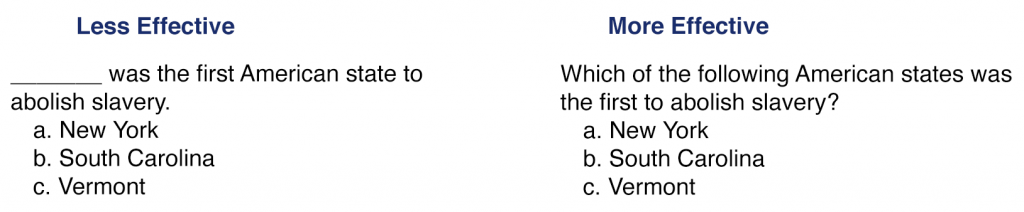

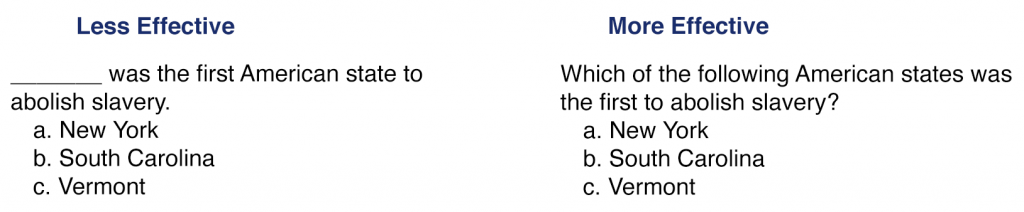

- Write the stem as a question or partial sentence, which could include graphs, maps, or other graphic material. Note: Fill-in-the-blank multiple choice questions place a higher cognitive load on students, who need to keep the entire question in working memory while they test each potential option against it.

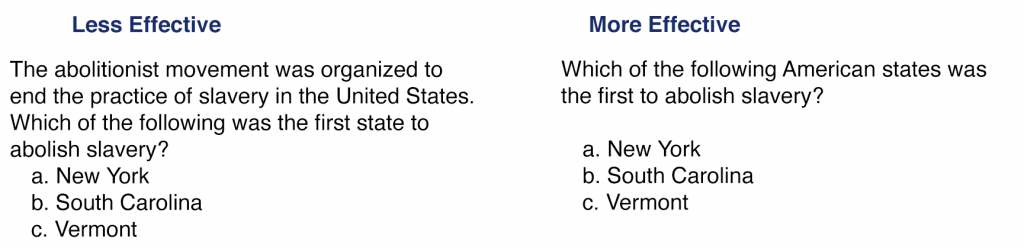

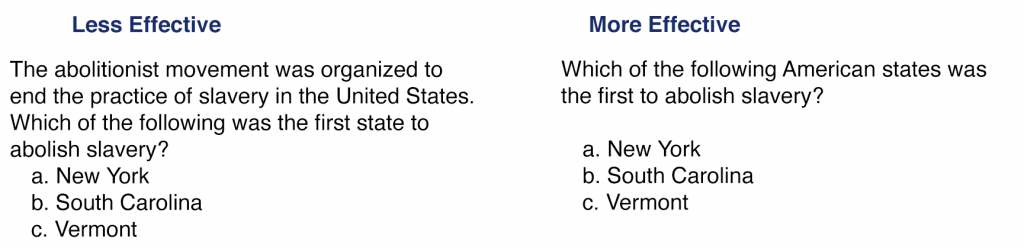

- Avoid irrelevant material – this can decrease the reliability of the assessment scores.

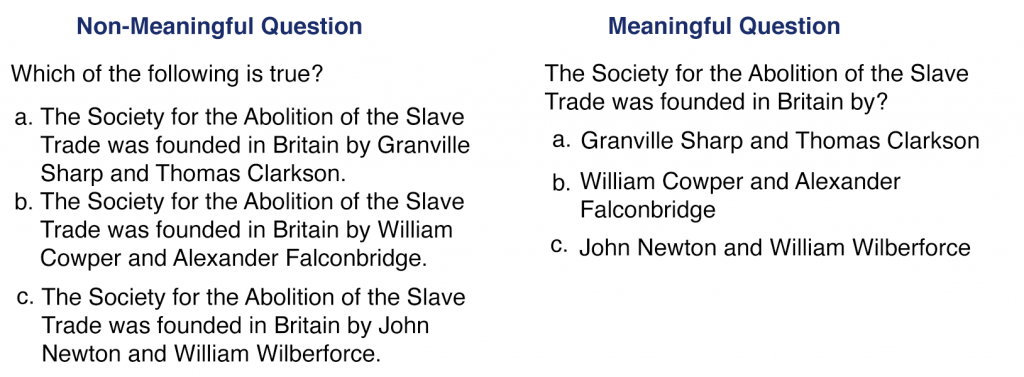

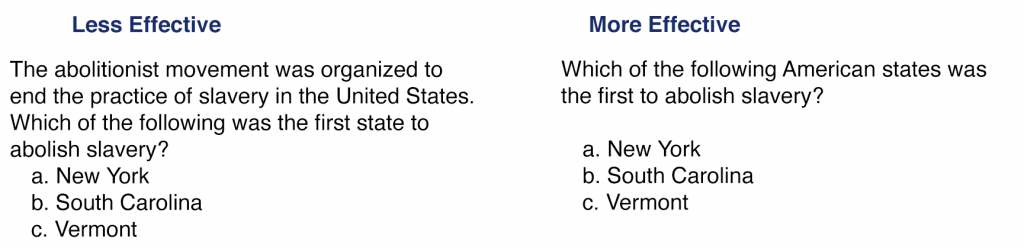

- Describe a single problem and state the main idea in the stem. Students should be able to determine the problem from the question, rather than by viewing the options.

- Write the stem simply and clearly. This is particularly important for international students whose first language may not be English.

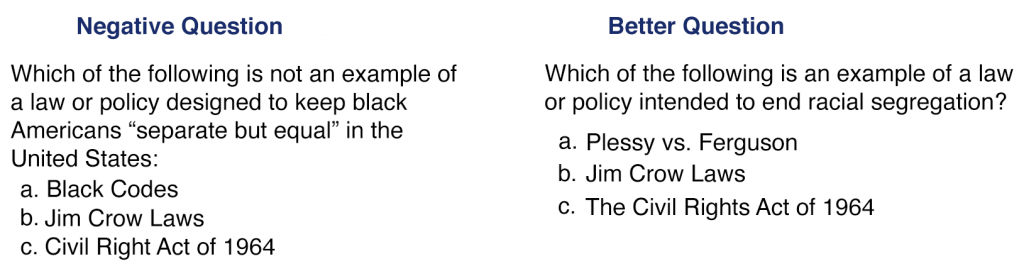

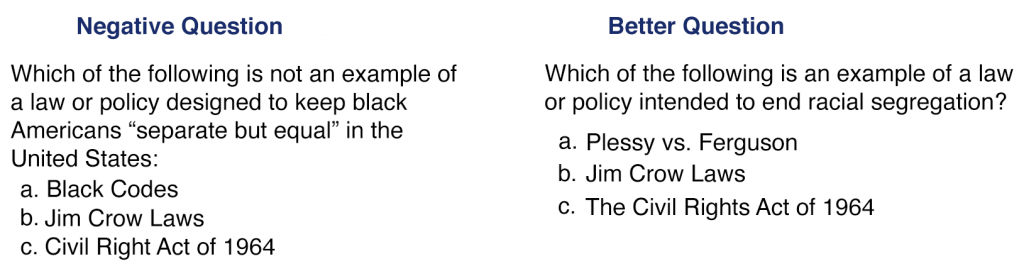

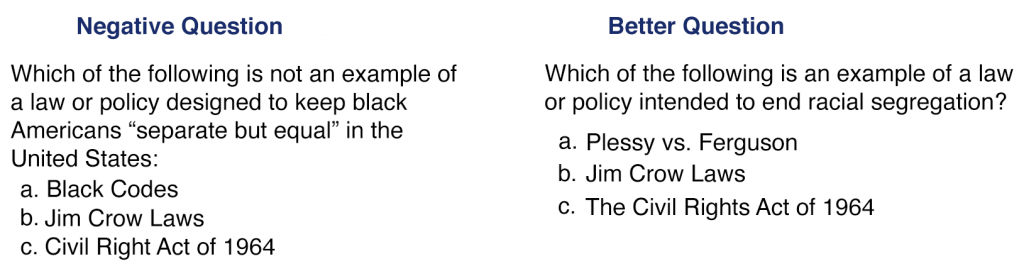

- Avoid negatively worded and double negative questions. A question such as “Which of the following is least unlikely to be a law or policy intended to end racial segregation?” is confusing for students, particularly for non-native English speakers. Survey scientists recommend avoiding double negative questions as a way to keep the assessment valid.

- Write questions with only one correct or best answer.

- Make sure your grammar and spelling are correct so as not to confuse students. Students tend to select the most grammatically correct answer, which may not be the correct one.
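The guideline on negative wording can be partly checked by machine. The following is a minimal sketch, not a validated tool: the word list `NEGATION_WORDS` and the function names are hypothetical choices made for illustration, and a simple word match will miss some negatives and flag some harmless wording, so treat any hit only as a prompt to reread the stem.

```python
import re
from typing import Optional

# Hypothetical starter list of negative words; extend it for your own subject area.
NEGATION_WORDS = {
    "not", "no", "never", "none", "neither", "nor",
    "except", "least", "unlikely", "incorrect", "false", "untrue",
}


def negations_in(stem: str) -> list[str]:
    """Return the negative words found in a stem, in order of appearance."""
    text = stem.lower().replace("’", "'")  # normalize curly apostrophes
    tokens = re.findall(r"[a-z']+", text)
    return [t for t in tokens if t in NEGATION_WORDS or t.endswith("n't")]


def check_stem(stem: str) -> Optional[str]:
    """Return a warning for negative or double negative wording, else None."""
    found = negations_in(stem)
    if len(found) >= 2:
        return "possible double negative: " + ", ".join(found)
    if len(found) == 1:
        return "negatively worded: " + found[0]
    return None


print(check_stem(
    "Which of the following is least unlikely to be a law or policy "
    "intended to end racial segregation?"
))
```

A stem that comes back with a warning is usually easier to fix by restating it positively than by adding emphasis to the negative word.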

The Distractors or Alternatives:

Distractors or alternatives are the answers other than the correct answer.

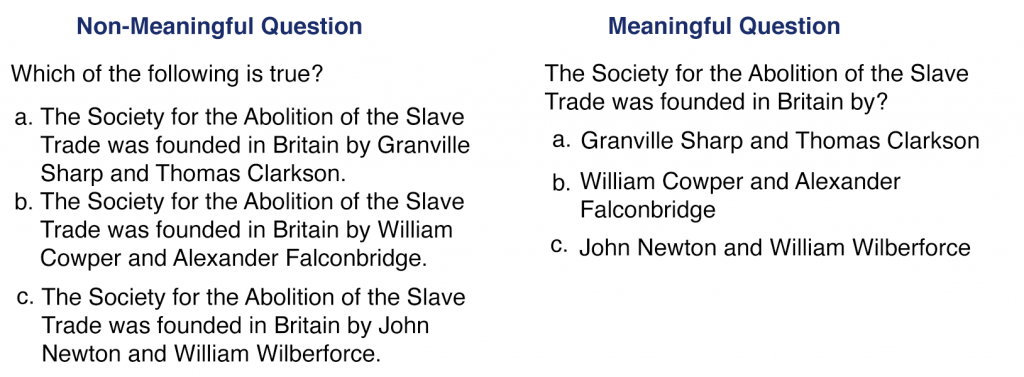

- Distractors should be plausible. Easy or silly distractors reduce the validity of the assessment.

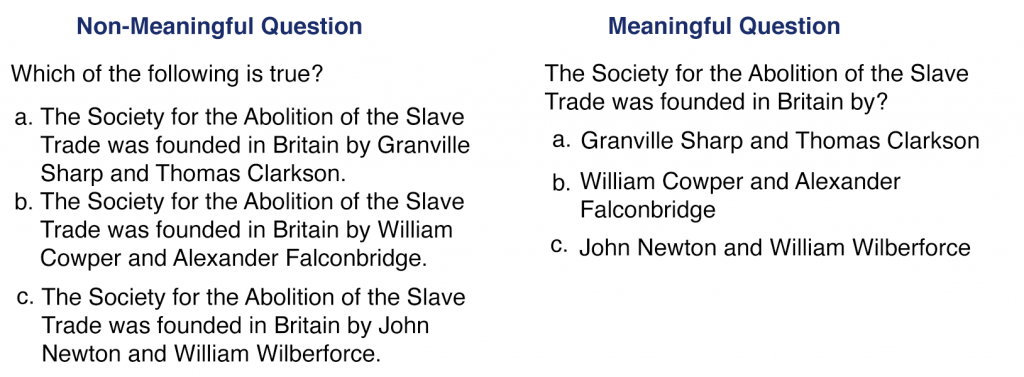

- Distractors should all be roughly the same length and level of detail as the correct answer. The longest option is often the correct one, and students who are good at test-taking use answer length as a hint.

- Choose an optimal number of distractors. While there may still be some debate about this, research shows that students perform just as well on quizzes with three options (one correct answer and two distractors) as they do with four or five options. Why? Many distractors are never chosen by students because they are not plausible, which makes them non-functional. Writing fewer distractors also makes constructing the quiz easier and faster.

- Avoid double negatives. Negatively worded questions and double negative questions (e.g., “Which of the following is least unlikely to be a speculative purchase?”) are confusing for students, particularly for non-native English speakers. Survey scientists recommend avoiding double negative questions as a way to keep the assessment valid.

- Avoid “all of the above” and “none of the above.” Test-takers who can identify that more than one choice is correct will know that the answer is “all of the above.” When “none of the above” is used, the test-taker simply needs to identify whether one of the answers is correct, and if so, they know it cannot be “none of the above.” Students don’t have to look any further or think about the problem. In either case, students can use partial knowledge to arrive at a correct answer, making the quiz less valid.

- Mix up the order of answers. Instructors often make b or c the correct answer. Mix up the order of your options to avoid patterns that let students guess the correct answer. HuskyCT has settings for this.

- Avoid trick questions. The purpose of an assessment is to test a learner’s knowledge, not to trick students. Making a test question and answers overly complicated does NOT make the assessment more rigorous and may interfere with test validity.
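For instructors who keep quiz items in a script or spreadsheet export, a few of the checks above can be sketched in code. The example below is illustrative only: the `Item` structure, the function names, and the `length_ratio` threshold of 1.5 are assumptions made for this sketch, not part of HuskyCT or any particular quiz tool. It shuffles the options while keeping track of the correct answer, and flags “all of the above,” “none of the above,” and a correct answer that is much longer than every distractor.

```python
import random
from dataclasses import dataclass


@dataclass
class Item:
    """One multiple choice question (hypothetical structure for this sketch)."""
    stem: str
    options: list[str]
    correct_index: int  # position of the correct answer in `options`


def shuffle_options(item: Item, rng: random.Random) -> Item:
    """Return a copy of the item with its options in random order."""
    order = list(range(len(item.options)))
    rng.shuffle(order)
    return Item(
        stem=item.stem,
        options=[item.options[i] for i in order],
        # The correct answer moves to wherever its old index landed.
        correct_index=order.index(item.correct_index),
    )


def lint_options(item: Item, length_ratio: float = 1.5) -> list[str]:
    """Return warnings for option problems; an empty list means none were found."""
    warnings = []
    normalized = [opt.strip().lower().rstrip(".") for opt in item.options]
    for phrase in ("all of the above", "none of the above"):
        if phrase in normalized:
            warnings.append(f'uses "{phrase}"')
    correct_len = len(item.options[item.correct_index])
    distractor_lens = [
        len(opt) for i, opt in enumerate(item.options) if i != item.correct_index
    ]
    # Assumed rule of thumb: flag a correct answer over 1.5x the longest distractor.
    if distractor_lens and correct_len > length_ratio * max(distractor_lens):
        warnings.append("correct answer is much longer than every distractor")
    return warnings


sample = Item(
    stem="Hypothetical stem?",
    options=["Short", "A much longer and far more detailed correct answer", "Brief"],
    correct_index=1,
)
print(lint_options(sample))
print(shuffle_options(sample, random.Random()).options)
```

Character count is only a rough proxy for “length and level of detail,” so a warning here calls for a second look rather than an automatic rewrite.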

Measuring higher order thinking in multiple choice questions

While many multiple choice questions are written to assess skills of knowledge and comprehension, it is also possible to write questions for higher order thinking skills.

To measure higher order thinking, consider the following:

- Write a stem which aligns with a higher level learning objective (application, analysis, evaluation, creation – Bloom’s Taxonomy framework)

- Write a stem which requires multilogical thinking, that is, applying knowledge of more than one fact to the problem.

- Write distractors that require close examination to rule out.

A couple of ways to write higher order multiple choice questions:

- Item Flipping:

  - In this type of question, present the main item in the stem, and ask the learner to identify the underlying rule or concept in the options. The learner will need to have a complete understanding of the distractor concepts to answer the question correctly.

- Use of High Quality Distractors:

  - All distractors should be plausible with no hints so students are forced to evaluate each answer.

  - Ask the learner to select the “best” answer, rather than the correct option. Make sure the correct answer is ‘more correct’ than the distractors.

- Ask students to explain the correct answer choice, or why the incorrect answers do not apply, by interpreting graphical information (e.g., data from a relevant experiment).

References:

Chiavaroli, Neville (2017) “Negatively-Worded Multiple Choice Questions: An Avoidable Threat to Validity,” Practical Assessment, Research, and Evaluation: Vol. 22, Article 3.

Haladyna, Thomas M., Steven M. Downing, and Michael C. Rodriguez (2002) “A Review of Multiple-Choice Item-Writing Guidelines for Classroom Assessment,” Applied Measurement in Education: Vol. 15, No. 3, pp. 309-333.

Scully, Darina (2017) “Constructing Multiple-Choice Items to Measure Higher-Order Thinking,” Practical Assessment, Research, and Evaluation: Vol. 22, Article 4.